Caching Strategies

In the fast-paced world of technology, system design is the backbone of every scalable application. It is what ensures your app can handle growth, manage user demand, and serve content efficiently. One of the secret sauces for achieving that is caching, a concept that might sound simple at first but is very powerful when used wisely. Today, we dive into the world of caching strategies, a topic that is often taken for granted but can make or break system performance.

Understanding Caching

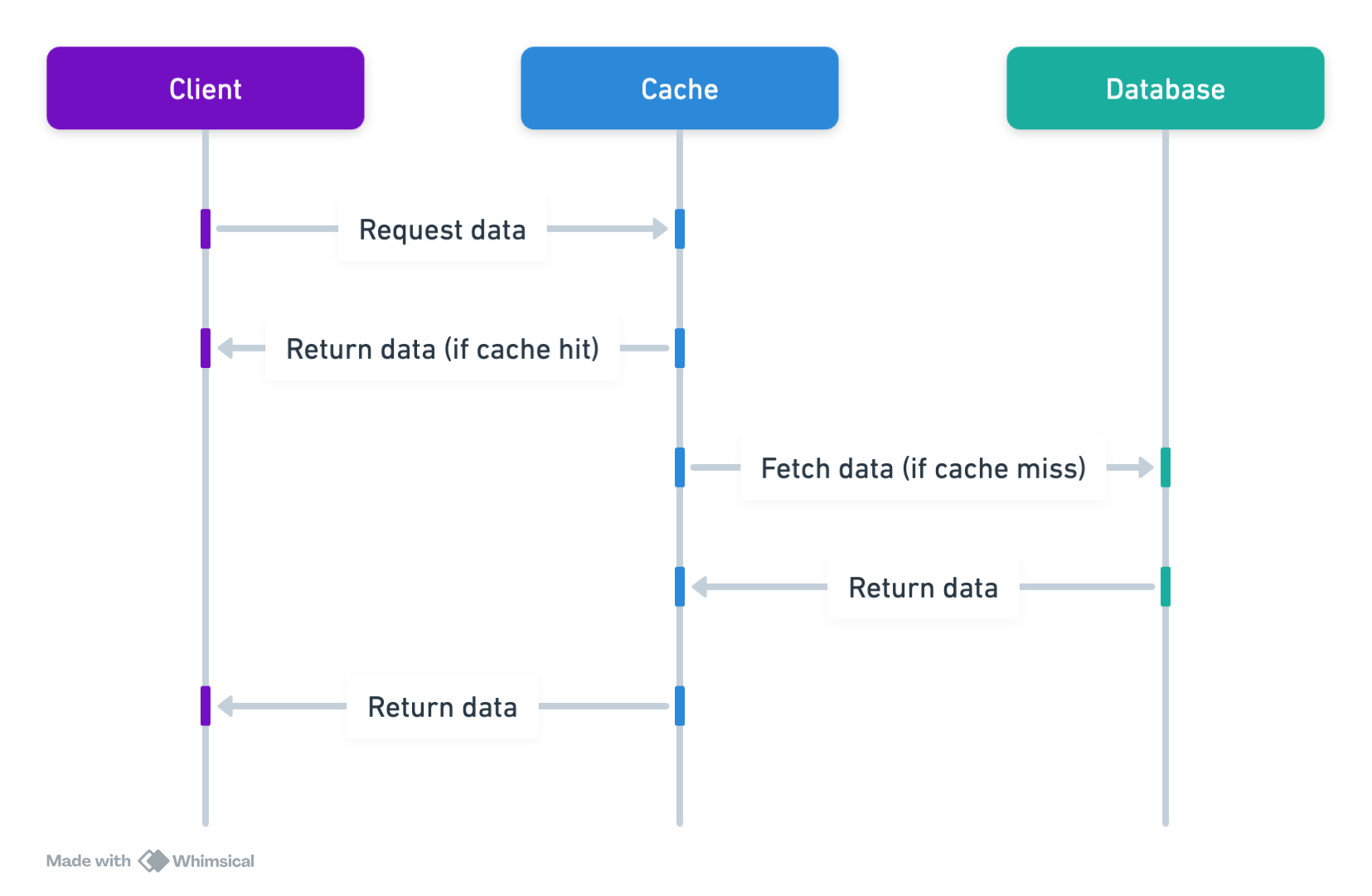

At its core, a cache is a fast store that holds the things you are going to need many times over, so you don't have to search through the colossal storeroom that is your database each time. Copies of files or data are kept temporarily in a location with relatively fast access, which reduces repeated queries to the database and considerably shortens response times.

Different types of caching serve different needs. Local caching keeps data close to the application, usually within the same memory space. Distributed caching spreads the cache across servers, making it a good fit for large-scale applications that need data close by no matter where users are. Then there is the Content Delivery Network (CDN), which caches static web content at locations around the world, closer to users, to shave time off page loads.

Caching Strategies

Cache Aside (Lazy Loading)

This strategy waits until data is requested before caching it. Imagine you're looking for a book in a library. If it is not on the shelf (cache), you ask for it from the storage room (database), read it, then put it on the shelf for faster access next time. It's great because it avoids filling your cache with data nobody asks for, but it means the first request for each item is slower.
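The lookup flow described above can be sketched roughly as follows. This is a minimal illustration, not a production implementation: the `CacheAside` class, the `load_from_db` callback, and the `fake_db` dictionary are all hypothetical names standing in for a real cache and database.

```python
from typing import Callable, Dict, Optional


class CacheAside:
    """Cache-aside: check the cache first, load from the database on a miss."""

    def __init__(self, load_from_db: Callable[[str], Optional[str]]) -> None:
        self._load_from_db = load_from_db  # hypothetical database lookup
        self._cache: Dict[str, str] = {}
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        if key in self._cache:
            return self._cache[key]        # hit: no database call
        self.misses += 1                   # miss: fall back to the database
        value = self._load_from_db(key)
        if value is not None:
            self._cache[key] = value       # populate the cache for next time
        return value


# A plain dict stands in for the database (assumption for this sketch).
fake_db = {"book:1": "Dune"}
store = CacheAside(fake_db.get)
first = store.get("book:1")   # miss: loaded from fake_db
second = store.get("book:1")  # hit: served from the cache
```

Only the first call reaches the database; the second is answered from memory, which is the trade-off the library analogy describes.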

Write Through

Think of this as the library putting a book on the shelf the moment it acquires it. Every write goes to the cache and the database in the same operation, so the cache reflects each change as soon as the write completes. This keeps the cache fresh but can slow down write operations, because every write has to update two places before it finishes.
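A small sketch of the write path may help here. It assumes a dictionary named `db` in place of a real database, and `WriteThroughCache` is an illustrative class name rather than any library's API.

```python
from typing import Dict, Optional


class WriteThroughCache:
    """Write-through: every write updates the database and the cache together."""

    def __init__(self, db: Dict[str, str]) -> None:
        self._db = db                      # stand-in for a real database
        self._cache: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._db[key] = value              # persist first
        self._cache[key] = value           # then update the cache in the same call

    def get(self, key: str) -> Optional[str]:
        return self._cache.get(key)


db: Dict[str, str] = {}
cache = WriteThroughCache(db)
cache.put("book:1", "Dune")
```

After `put` returns, both `db` and the cache hold the new value. The cost is that the caller waits for both updates on every write.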

Write Behind (Write Back)

Here, data is written to the cache first and to the database a short time later. It is like writing yourself a note to shelve a new book and then actually doing it once you have a free moment. This makes write operations fast, but there is a risk of losing data if the cache fails before it syncs with the database.
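One way to picture the deferred write is a set of "dirty" keys that are flushed later. The sketch below assumes a dictionary as the database and an explicit `flush()` call; in a real system the flush would more likely run on a timer or in a background worker. All names are hypothetical.

```python
from typing import Dict, Optional, Set


class WriteBehindCache:
    """Write-behind: writes land in the cache and are persisted later."""

    def __init__(self, db: Dict[str, str]) -> None:
        self._db = db                      # stand-in for a real database
        self._cache: Dict[str, str] = {}
        self._dirty: Set[str] = set()      # keys not yet written to the database

    def put(self, key: str, value: str) -> None:
        self._cache[key] = value           # fast path: cache only
        self._dirty.add(key)

    def get(self, key: str) -> Optional[str]:
        return self._cache.get(key)

    def flush(self) -> int:
        """Persist all pending writes and return how many were written."""
        flushed = 0
        for key in list(self._dirty):
            self._db[key] = self._cache[key]
            flushed += 1
        self._dirty.clear()
        return flushed
```

Anything still in `_dirty` when the process dies is lost, which is exactly the risk described above.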

Refresh Ahead

This strategy is the zealous friend who anticipates when data will be needed and reloads it into the cache before it is requested. It is like knowing you will need a particular cookbook for Thanksgiving dinner and making sure it is on the shelf, ready to go, before you start cooking. It works well for data that is accessed on a predictable schedule, but it requires some smart logic to anticipate those needs.
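A common simplification of this idea is to refresh an entry when it is read close to its expiry, rather than predicting access times. The sketch below takes that approach and is only one possible reading of refresh ahead. The class name, the `refresh_ratio` parameter, and the injectable `clock` are assumptions made for illustration, and the refresh runs synchronously here where a real system would typically do it in the background.

```python
import time
from typing import Callable, Dict, Tuple


class RefreshAheadCache:
    """Refresh-ahead sketch: reload entries that are read close to expiry."""

    def __init__(self, loader: Callable[[str], str], ttl: float,
                 refresh_ratio: float = 0.5,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader                       # hypothetical data source
        self._ttl = ttl
        self._refresh_after = ttl * refresh_ratio   # age at which to refresh early
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # key -> (value, loaded_at)

    def _reload(self, key: str, now: float) -> str:
        value = self._loader(key)
        self._entries[key] = (value, now)
        return value

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is None or now - entry[1] >= self._ttl:
            return self._reload(key, now)           # missing or expired: load now
        value, loaded_at = entry
        if now - loaded_at >= self._refresh_after:
            # Still valid but ageing: refresh for the next reader and
            # return the current value to this one.
            self._reload(key, now)
        return value
```

With `ttl=10` and `refresh_ratio=0.5`, a read at age 6 returns the cached value and reloads the entry, so the next reader sees fresh data without ever hitting an expired entry.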

Implementation Considerations

It is important to choose the right cache size and eviction policy (such as LRU, LFU, FIFO, or LIFO) so that resources are used efficiently and performance stays high. Consistency between your cache and the database must also be maintained to avoid serving stale or wrong data. And when caching sensitive information, security becomes a paramount concern to prevent unauthorized access.

Setting a time-to-live (TTL) value for cached data helps manage stale data, while cache invalidation strategies keep your cache fresh. Cache tags and namespaces offer a sophisticated way to manage and invalidate related data sets. Predictive caching, sometimes with the help of machine learning, can dynamically adjust what's cached based on user behavior, making your application even faster and more efficient.
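To make TTL and explicit invalidation concrete, here is a minimal sketch. `TTLCache` and its methods are hypothetical names, and the `clock` parameter exists only so the expiry logic can be exercised without waiting.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Entries expire a fixed number of seconds after they are written."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # key -> (value, expires_at)

    def set(self, key: str, value: str) -> None:
        self._entries[key] = (value, self._clock() + self._ttl)

    def get(self, key: str) -> Optional[str]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]         # expired: drop it and report a miss
            return None
        return value

    def invalidate(self, key: str) -> None:
        """Remove an entry immediately, for example after the source data changes."""
        self._entries.pop(key, None)
```

TTL bounds how stale an entry can get, while `invalidate` handles the case where you know the underlying data has just changed.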

Least Recently Used (LRU)

LRU stands for "Least Recently Used" and is one of the best-known eviction policies. Picture a stack of books: every time you read a book, you put it back on top of the stack. When the stack exceeds its limit, books are removed from the bottom, which are the ones read longest ago, to make room. LRU works well when recently accessed data is likely to be accessed again and you want the cache to track recent usage patterns.
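The book-stack picture maps naturally onto Python's `collections.OrderedDict`. The following is a small sketch of one way to build an LRU cache with it; the `LRUCache` class is illustrative, not a reference implementation.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class LRUCache:
    """Evicts the entry that was read or written longest ago."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)       # reading moves the item to the "top"
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # drop the least recently used item


cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")        # "a" is now the most recently used
cache.put("c", 3)     # evicts "b", the least recently used
```

For caching function results specifically, the standard library's `functools.lru_cache` decorator applies the same policy without any custom class.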

Least Frequently Used (LFU)

LFU takes a more analytical approach by tracking how often items are accessed. The least frequently accessed items are evicted first. Compare it to a library that discards books that are not checked out often enough. LFU can outperform LRU when access patterns depend more on frequency than on recency, because frequently accessed data stays in the cache even if it has not been used recently.
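A deliberately simple sketch of LFU follows. It keeps one access counter per key and scans for the smallest counter on eviction, which is O(n); real implementations usually use more elaborate structures. The `LFUCache` name and the tie-breaking rule (oldest insertion loses) are choices made for this example.

```python
from typing import Any, Dict, Hashable, Optional


class LFUCache:
    """Evicts the entry with the fewest accesses."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._values: Dict[Hashable, Any] = {}
        self._counts: Dict[Hashable, int] = {}

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._values:
            return None
        self._counts[key] += 1
        return self._values[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key not in self._values and len(self._values) >= self._capacity:
            # Linear scan for the least-used key; ties go to the oldest insertion.
            victim = min(self._counts, key=self._counts.get)
            del self._values[victim]
            del self._counts[victim]
        self._values[key] = value
        self._counts[key] = self._counts.get(key, 0) + 1


cache = LFUCache(2)
cache.put("a", 1)
cache.get("a")
cache.get("a")        # "a" has been accessed often
cache.put("b", 2)     # "b" is newer but rarely used
cache.put("c", 3)     # evicts "b"; an LRU cache would have evicted "a"
```

The last line shows the difference from LRU: the popular item survives even though it was not the most recently touched.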

First In, First Out (FIFO)

This is a very simple eviction policy: the first objects placed in the cache are the first to be removed, regardless of how they are accessed. It is like the line at a grocery store, where customers are served on a first-come, first-served basis. FIFO is easy to understand and easy to implement, but it is often a poor fit when older data remains relevant or is accessed frequently.
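FIFO needs no access tracking at all, which the short sketch below tries to show. `FIFOCache` is a hypothetical class that uses `collections.OrderedDict` purely to remember insertion order.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class FIFOCache:
    """Evicts entries in the order they were first inserted."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        return self._items.get(key)        # reads do not change eviction order

    def put(self, key: Hashable, value: Any) -> None:
        if key not in self._items and len(self._items) >= self._capacity:
            self._items.popitem(last=False)  # remove the oldest insertion
        self._items[key] = value           # updating a key keeps its position


cache = FIFOCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")        # reading "a" does not protect it
cache.put("c", 3)     # evicts "a", the first item inserted
```

The read of `"a"` makes no difference to what gets evicted, which is where FIFO can hurt when old entries are still in demand.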

Last In, First Out (LIFO)

LIFO evicts the most recently added items first, like a stack of plates where the last plate added is the first one taken.

This usually makes little sense for caching, since it can remove items that were just used. Still, there are niche scenarios where LIFO does better, especially in applications where the most recent data quickly becomes outdated or is replaced.
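For completeness, a LIFO cache can be sketched with a plain dictionary, relying on the fact that Python dictionaries preserve insertion order (Python 3.7 and later) and that `dict.popitem()` removes the most recently inserted pair. `LIFOCache` is an illustrative name.

```python
from typing import Any, Dict, Hashable, Optional


class LIFOCache:
    """Evicts the most recently inserted entry when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: Dict[Hashable, Any] = {}  # insertion-ordered

    def get(self, key: Hashable) -> Optional[Any]:
        return self._items.get(key)

    def put(self, key: Hashable, value: Any) -> None:
        if key not in self._items and len(self._items) >= self._capacity:
            self._items.popitem()          # removes the last inserted entry
        self._items[key] = value


cache = LIFOCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.put("c", 3)     # evicts "b", the newest entry at that point
```

Older entries stay put while the newest slot keeps turning over, which only pays off in the niche cases mentioned above.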

Content Delivery Networks (CDNs)

Content Delivery Networks (CDNs) are no longer an optional enhancement; they have become indispensable for high-traffic websites and platforms. Milliseconds can affect user engagement and retention, so most heavily visited websites rely extensively on CDNs to deliver content with minimal delay, no matter where the user is located. By caching content across a global network of servers, CDNs help these platforms handle large volumes of traffic efficiently while maintaining performance and reliability at scale.

Conclusion

Caching might seem like just another component in the vast realm of system design, but its impact on performance is monumental. By understanding and implementing the right caching strategies, you can ensure your application remains swift and scalable, no matter how much it grows. So, consider this your nudge to explore the potential of caching and tailor it to the unique needs of your system.