LLM Sandbox: One Year Journey to 100k Downloads 🎉

Hey everyone! 👋

You know that feeling when you're wrestling with a problem at 2 AM, and every existing solution feels like it was designed to make your life harder? That's exactly where I found myself a year ago.

I was knee-deep in building LLM applications, desperately trying to add ChatGPT Code Interpreter-like functionality. The existing options? Either ridiculously complex to set up or they wanted me to pay cloud credits for every tiny script execution. As a developer who likes to tinker locally and keep costs reasonable, I was getting pretty frustrated.

So I did what any stubborn developer would do - I built my own solution. Something simple, something that would "just work" on my laptop without requiring a PhD in DevOps.

Fast forward to today, and I'm honestly blown away. LLM Sandbox has reached over 100,000 downloads on PyPI! 🚀

I'll be honest - when I first pushed that initial commit, I never imagined it would grow into something that so many developers rely on.

From Simple to Sophisticated: What's New

Special thanks to everyone who's opened issues, provided feedback, and helped make LLM Sandbox more robust. Your contributions have shaped this project into what it is today.

🏗️ Multi-Backend Architecture

What started as a Docker-only solution has evolved into a flexible platform supporting multiple container backends:

from llm_sandbox import SandboxSession

# Docker (classic)
with SandboxSession(backend="docker", lang="python") as session:
    result = session.run("print('Hello from Docker!')")

# Kubernetes (enterprise-scale)
with SandboxSession(backend="kubernetes", lang="python") as session:
    result = session.run("print('Hello from Kubernetes!')")

# Podman (rootless security)
with SandboxSession(backend="podman", lang="python") as session:
    result = session.run("print('Hello from Podman!')")

🛡️ Security-First Approach

The new security framework allows you to define custom policies:

from llm_sandbox import SandboxSession
from llm_sandbox.security import SecurityPolicy, RestrictedModule, SecurityIssueSeverity

# Create a simple policy
policy = SecurityPolicy(
    severity_threshold=SecurityIssueSeverity.MEDIUM,
    restricted_modules=[
        RestrictedModule(
            name="os",
            description="Operating system interface",
            severity=SecurityIssueSeverity.HIGH
        ),
        RestrictedModule(
            name="subprocess",
            description="Process execution",
            severity=SecurityIssueSeverity.HIGH
        )
    ]
)

with SandboxSession(lang="python", security_policy=policy) as session:
    # Check if code is safe before execution
    code = "import os\nos.system('ls')"
    is_safe, violations = session.is_safe(code)

    if is_safe:
        result = session.run(code)
        print(result.stdout)
    else:
        print("Code failed security check:")
        for violation in violations:
            print(f" - {violation.description} (Severity: {violation.severity.name})")

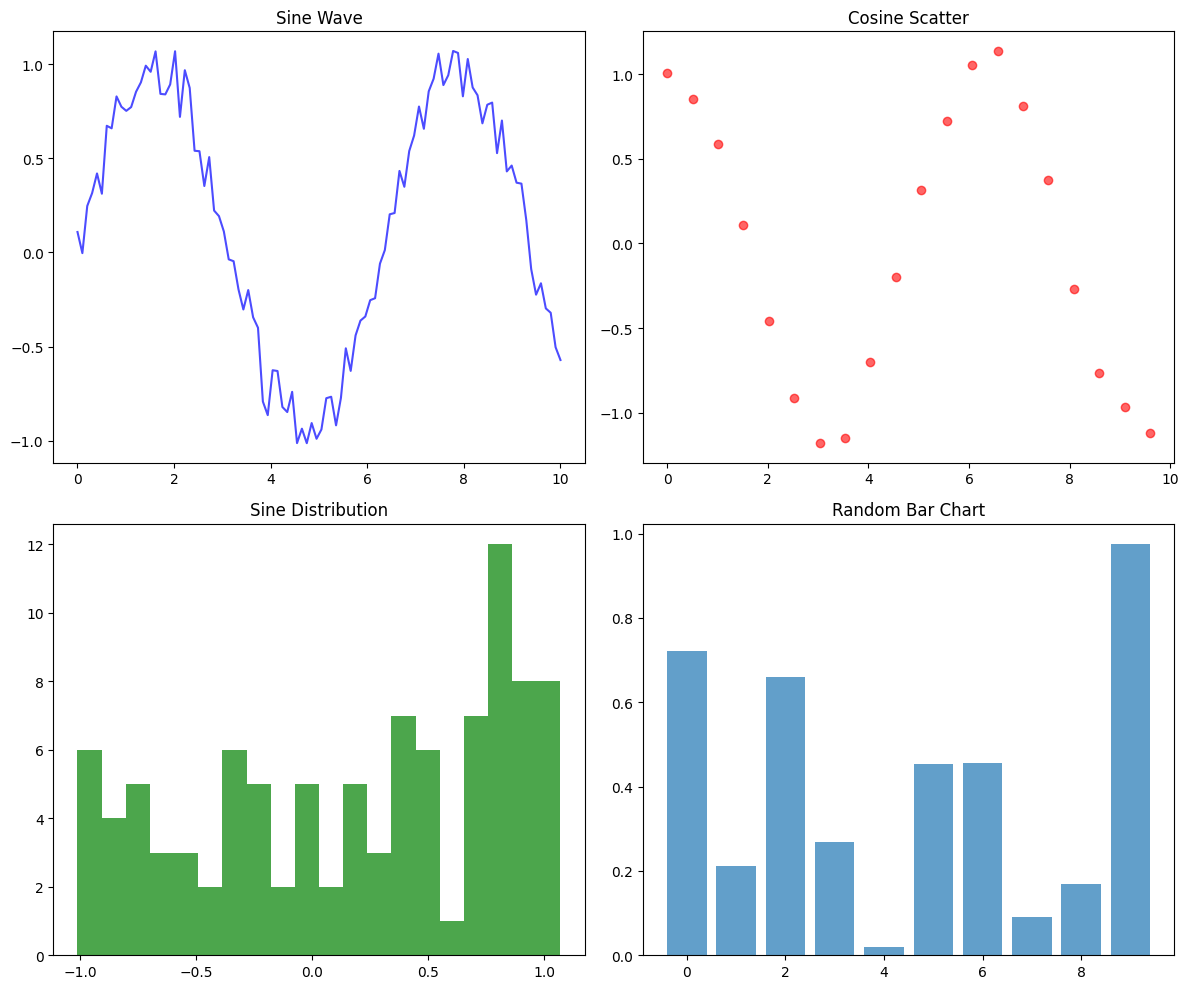
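If you're curious what a restricted-module check can look like conceptually, here's a rough, stdlib-only sketch. To be clear, this is not how LLM Sandbox implements it internally; `find_restricted_imports` is a hypothetical helper I made up for illustration, and it only assumes that restricted modules are identified by their top-level name.

```python
import ast


def find_restricted_imports(code: str, restricted: set[str]) -> list[str]:
    """Return the restricted top-level modules that `code` imports, sorted."""
    found = set()
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            # Relative imports such as `from . import x` have no module name.
            names = [node.module] if node.module else []
        else:
            continue
        for name in names:
            top_level = name.split(".")[0]  # "os.path" counts as "os"
            if top_level in restricted:
                found.add(top_level)
    return sorted(found)


violations = find_restricted_imports("import os\nos.system('ls')", {"os", "subprocess"})
print(violations)  # ['os']
```

A static check like this only sees literal `import` statements. Something like `__import__("os")` slips straight past it, which is one reason the container isolation still matters.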

📊 Automatic Artifact Extraction

One of the most useful features - automatic capture of plots and visualizations:

import base64
from pathlib import Path

from llm_sandbox import ArtifactSandboxSession

code = """
import matplotlib.pyplot as plt
import numpy as np

plt.style.use('default')

# Generate data
x = np.linspace(0, 10, 100)
y1 = np.sin(x) + np.random.normal(0, 0.1, 100)
y2 = np.cos(x) + np.random.normal(0, 0.1, 100)

# Create plot
fig, axes = plt.subplots(2, 2, figsize=(12, 10))
axes[0, 0].plot(x, y1, 'b-', alpha=0.7)
axes[0, 0].set_title('Sine Wave')
axes[0, 1].scatter(x[::5], y2[::5], c='red', alpha=0.6)
axes[0, 1].set_title('Cosine Scatter')
axes[1, 0].hist(y1, bins=20, alpha=0.7, color='green')
axes[1, 0].set_title('Sine Distribution')
axes[1, 1].bar(range(10), np.random.rand(10), alpha=0.7)
axes[1, 1].set_title('Random Bar Chart')

plt.tight_layout()
plt.show()

print('Plot generated successfully!')
"""

# Create a sandbox session
with ArtifactSandboxSession(lang="python", verbose=True) as session:
    # Run Python code safely
    result = session.run(code)
    print(result.stdout)  # Output: Plot generated successfully!

    for plot in result.plots:
        with Path("docs/assets/example.png").open("wb") as f:
            f.write(base64.b64decode(plot.content_base64))
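The example above produces a single figure, so one output path is enough. If your code generates several plots, you'll probably want one file per plot. Here's a small sketch of that; `save_plots` is a hypothetical helper (not part of LLM Sandbox), it assumes every plot is a PNG, and it relies only on the `content_base64` attribute used above. The stand-in objects are there so the snippet runs without a sandbox.

```python
import base64
import tempfile
from pathlib import Path
from types import SimpleNamespace


def save_plots(plots, out_dir: str) -> list[Path]:
    """Decode each plot's base64 payload and write it to its own file."""
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    saved = []
    for index, plot in enumerate(plots, start=1):
        # Assumption: plots are PNG images, as in the example above.
        path = target / f"plot_{index}.png"
        path.write_bytes(base64.b64decode(plot.content_base64))
        saved.append(path)
    return saved


# Stand-in objects for illustration; in real use you would pass result.plots.
fake_plots = [
    SimpleNamespace(content_base64=base64.b64encode(b"first").decode()),
    SimpleNamespace(content_base64=base64.b64encode(b"second").decode()),
]

with tempfile.TemporaryDirectory() as tmp:
    saved = save_plots(fake_plots, tmp)
    print([p.name for p in saved])  # ['plot_1.png', 'plot_2.png']
```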

🔗 Container Reusability

Our latest feature allows you to connect to existing containers - perfect for complex environments:

from llm_sandbox import SandboxSession

# Connect to a pre-configured container
with SandboxSession(container_id='my-existing-container', lang='python') as session:
    result = session.run("import tensorflow as tf; print(tf.__version__)")

Looking Forward: What's Next

🍎 Apple Container Backend Support

Apple dropped their containerization bombshell at WWDC 2025, and we're all over it. VM-level isolation on Apple Silicon? Yes, please. This could be a game-changer for Mac developers who want the best of both worlds - security and performance.

🔌 Model Context Protocol (MCP) Server

Direct Claude Desktop, ChatGPT, or Cursor integration is coming! Imagine running code directly from Claude's interface with full sandbox security. We're working with Anthropic's MCP standard to make this seamless.

🌐 REST API Server

"Can I use this from my Go application?" "What about Node.js?" "Java?"

I hear you! A language-agnostic HTTP API is in the works so everyone can join the party, not just us Python folks.

📊 Interactive Python Kernel

Jupyter-style persistent execution is coming. Variables will stick around between runs, making multi-step data analysis actually pleasant. No more redefining your DataFrame fifty times.
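To make "variables stick around between runs" concrete, here's a toy sketch of the idea using nothing but the standard library. This is not the planned implementation and has nothing to do with LLM Sandbox's API: `PersistentNamespace` is a made-up class, and it runs code in your own process with zero isolation, so it only illustrates the state-sharing part.

```python
import contextlib
import io


class PersistentNamespace:
    """Toy stand-in for a kernel: every run shares one namespace dict."""

    def __init__(self) -> None:
        self._namespace: dict = {}

    def run(self, code: str) -> str:
        """Execute `code` in the shared namespace and return captured stdout."""
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(code, self._namespace)  # No sandboxing here, illustration only.
        return buffer.getvalue()


kernel = PersistentNamespace()
kernel.run("values = [1, 2, 3]")                   # first run defines a variable
print(kernel.run("print(sum(values))"), end="")  # second run still sees it: 6
```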

🛡️ Security Presets

"Development", "Production", "Fort Knox" - pick your security level and we'll handle the details. Because configuring security policies shouldn't require a cybersecurity degree.

⚡ Firecracker Backend

AWS's Firecracker for millisecond-fast microVM startup? Yeah, we're going there. Maximum isolation with minimal overhead.

Getting Started Today

If you haven't tried LLM Sandbox yet, it's never been easier:

# The basics
pip install llm-sandbox

# Pick your flavor
pip install 'llm-sandbox[docker]'  # Most people start here
pip install 'llm-sandbox[k8s]'     # Enterprise vibes
pip install 'llm-sandbox[podman]'  # Security first

from llm_sandbox import SandboxSession

# Your first sandbox (it's that simple)
with SandboxSession(lang="python") as session:
    result = session.run("""
print("Welcome to LLM Sandbox!")
print("Where AI-generated code runs safely.")
""")
    print(result.stdout)

Join Our Community

- 🌟 Star us on GitHub: vndee/llm-sandbox

- 📖 Read our docs: vndee.github.io/llm-sandbox

- 💬 Join discussions: GitHub Discussions

- 🐛 Report issues: GitHub Issues

A Heartfelt Thank You

To everyone who's been part of this journey - whether you downloaded the package, starred the repo, reported a bug, or just spread the word - thank you. You've made this incredible milestone possible.

P.S. If you build something cool with LLM Sandbox, I genuinely want to hear about it. Seriously. Drop me a line - success stories fuel my motivation more than coffee (and that's saying something).